Twin Ready Manufacturing: Preparing Processes, People, and Products

Digital twins are often dismissed as expensive, complex, and hard to sustain. That perception is not about the technology. It is about readiness. When processes are inconsistent, data is unreliable, and ownership is unclear, even a well-built twin becomes a one-time experiment instead of a capability that improves decisions.

In my previous blog, I wrote about digital twins as a decision tool and why mid-market manufacturers must stay use-case driven within a 6 to 12 month window. This post addresses the question I hear most often from manufacturing leaders: How do we become twin ready without turning this into a multi-year transformation program?

To make this practical, I will use a real situation from my earlier work. An automotive component manufacturer had an induction hardening bottleneck with manual processes, safety constraints, and prior automation disappointment. We used a digital twin to validate the automation design before deployment, selected a SCARA robot, and improved efficiency by more than 20 percent.

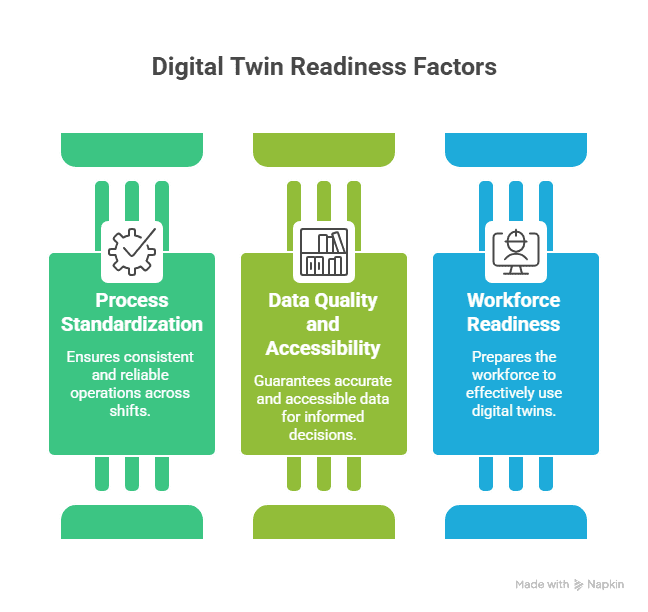

What “twin ready” means in manufacturing terms

Twin ready does not mean you bought a platform. It means your organization can keep a model aligned to reality, so the outputs are trusted enough to drive decisions.

I define twin readiness across three pillars. I will show each pillar through the induction hardening example.

Pillar 1: Process standardization

If the process is inconsistent, the twin becomes a one-time engineering artifact.

Twin ready signals

Work is defined consistently across shifts

Downtime, changeovers, scrap, and rework are recorded the same way

Approval points for changes are clear during build and launch

In the induction hardening example, the bottleneck was not only the machine. It was the variability created by manual handling and shift execution. Standardizing the load and unload sequence, exception handling, and hand-off rules was a prerequisite to trusting the predicted throughput.
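One way to make "consistent across shifts" measurable is to compare the spread of cycle times per shift. The sketch below is a minimal illustration, not the method used in the project: the function name `shift_variability` and the sample values are hypothetical, and the coefficient of variation is just one reasonable choice of spread measure.

```python
from statistics import mean, pstdev


def shift_variability(cycle_times_by_shift):
    """Return the coefficient of variation (std dev / mean) of cycle time per shift."""
    result = {}
    for shift, times in cycle_times_by_shift.items():
        avg = mean(times)
        result[shift] = pstdev(times) / avg
    return result


# Hypothetical load/unload cycle times in seconds (illustrative, not project data).
samples = {
    "A": [40.0, 40.0, 40.0, 40.0],
    "B": [30.0, 50.0, 30.0, 50.0],
}

cv = shift_variability(samples)
for shift, value in sorted(cv.items()):
    print(f"Shift {shift}: coefficient of variation = {value:.2f}")
```

Both shifts here average the same cycle time, yet shift B is far less predictable. A throughput prediction built on the average alone would look equally valid for both, which is why standard work has to come before the model.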

Pillar 2: Data quality and accessibility

A twin needs good-enough data that connects design intent to production behavior.

Twin ready signals

Consistent identifiers for part, revision, and operation

Actual cycle time and downtime reasons captured for the scoped process

Basic method to capture quality outcomes and causes

In the induction hardening example, we focused on a narrow data spine needed for the decision: cycle time, handling time, downtime patterns, safety constraints, and quality checks impacted by automation.

Pillar 3: Workforce readiness

A twin only creates value when production, manufacturing engineering, quality, and maintenance use it to answer practical questions.

Twin ready signals

Clear ownership for keeping the model current

Training focused on decisions, not dashboards

A pathway for operators and maintenance to challenge assumptions

In the induction hardening example, concerns about job skills, maintenance complexity, and prior failures surfaced constraints early. That input improved the design before money was spent on hardware.

Integration points and what to connect first

Manufacturers stall when they try to connect everything. Anchor integration to one decision, then connect only what improves that decision.

Design and engineering

Connect when the question is: Will this design and process work as expected?

CAD geometry and interfaces

Critical characteristics and inspection requirements

Revision history that affects fit, cycle time, or quality

In our project, the part geometry and material informed the end-effector design, the robot selection, and the pallet design.

Manufacturing execution

Connect when the question is: How will this behave under real mix and schedule?

Routing and work definitions

Actual cycle times and downtime causes

Exception handling

The model forced clarity on material flow, work steps, and exception handling, which then informed MES logic and operator routines.

Maintenance

Connect when the question is: Can we sustain performance and uptime?

Asset hierarchy and critical spares

Failure patterns and mean time to repair

Preventive maintenance compliance for scoped equipment

The automation cell design was shaped by the maintenance team's input on why previous automation had failed and what they needed in order to keep the cell running to high production standards.

Common pitfalls when manufacturers start too early

Building a model before defining the decision it must improve

Expecting the twin to impose process discipline without standard work

Allowing revisions and routing to drift, making the twin obsolete

Overinvesting in integration before proving one measurable outcome

Chasing high fidelity instead of building credibility and adoption

Induction hardening lesson: The twin worked because it prevented physical rework by resolving constraints before deployment.

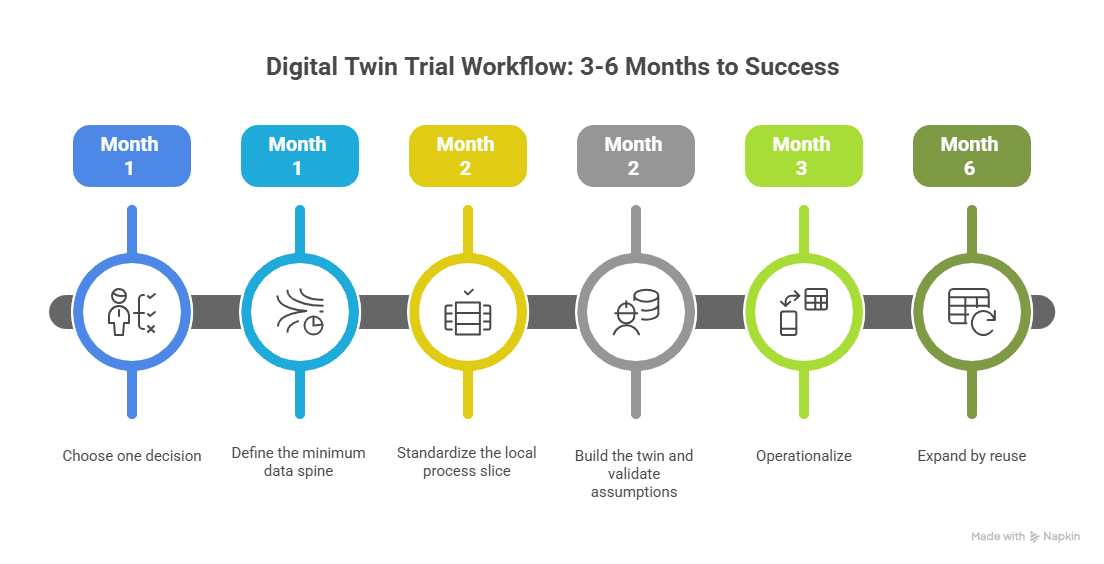

Workflow to trial a digital twin (3-6 months)

This workflow is designed to deliver one credible use case and build reusable foundations.

Step 1: Choose one decision

Pick one decision already costing time, margin, or customer confidence.

Examples:

Automation cell design and commissioning risk

NPI ramp stability for the first 8 to 12 weeks

Bottleneck capacity recovery without new equipment

Repeat warranty or service failure on a shipped platform

Step 2: Define the minimum data spine

Create a short list of data required for that decision:

Part and revision identification rule

Operation steps at the level needed for modeling

Cycle time, downtime, and quality outcomes for the scoped area
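As a rough illustration, the short list above can be written down as a single record type plus a few checks. This is a sketch under stated assumptions: `SpineRecord`, its field names, and the rules in `validate` are hypothetical and would be adapted to whatever your ERP or MES actually exposes.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class SpineRecord:
    """One observation for the scoped process (hypothetical field names)."""
    part_id: str
    revision: str
    operation: str
    cycle_time_s: float
    downtime_s: float = 0.0
    downtime_reason: Optional[str] = None
    quality_ok: bool = True

    def key(self) -> str:
        # Part, revision, and operation together identify what was actually run.
        return f"{self.part_id}/{self.revision}/{self.operation}"


def validate(record: SpineRecord) -> List[str]:
    """Return a list of data problems; an empty list means the record is usable."""
    problems = []
    if not record.part_id or not record.revision or not record.operation:
        problems.append("missing identifier")
    if record.cycle_time_s <= 0:
        problems.append("non-positive cycle time")
    if record.downtime_s > 0 and not record.downtime_reason:
        problems.append("downtime without reason code")
    return problems


# Illustrative values only.
good = SpineRecord("P100", "B", "OP20", cycle_time_s=42.0)
bad = SpineRecord("P100", "", "OP20", cycle_time_s=0.0, downtime_s=120.0)

print(good.key(), validate(good))
print(validate(bad))
```

The point of keeping the record this small is that every field maps to one of the three items in the list. Anything that does not serve the chosen decision stays out until a later use case needs it.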

Step 3: Standardize the local process slice

Standardize only what touches the use case:

Work definition and exception rules

Cause codes that people will actually use

A clear rule for what changes require review

Step 4: Build the twin and validate assumptions

Build the simplest twin that answers the decision:

Simulation twin for layout, reach, interference, cycle time risk

Process twin for NPI ramp or staffing and WIP constraints

Performance twin for a bottleneck schedule and batching rules

Step 5: Operationalize

Weekly review with production, quality, and maintenance

One person accountable for data refresh and model updates

One leader accountable for acting on outputs

Step 6: Expand by reuse

Expand only after the first measurable win:

More variants of the same product family

More assets in the same line

One additional use case that reuses the same data spine

This mirrors my broader transformation principle of starting small, proving value, and scaling based on real impact.

Outcome metrics leaders will recognize and can measure

Choose metrics that both manufacturing and finance teams already track, and that a twin can influence within the scoped use case:

Capacity released on a constrained asset (hours per week or units per shift)

On-time delivery for the scoped product family

WIP days or inventory days in the scoped flow

These map to operations reality and working capital impact, and they can usually be measured with existing ERP, MES, and basic production logs.
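Capacity released is the easiest of these to compute from basic production logs. The sketch below assumes a simple model in which the released hours equal the cycle time saved per unit multiplied by weekly volume. The function name and the input values are hypothetical and are not figures from the induction hardening project.

```python
def capacity_released_hours(units_per_week, cycle_before_s, cycle_after_s):
    """Hours per week freed on a constrained asset by a cycle time reduction."""
    saved_seconds = (cycle_before_s - cycle_after_s) * units_per_week
    return saved_seconds / 3600.0


# Illustrative inputs: 6,000 units per week, cycle time cut from 60 s to 48 s.
hours = capacity_released_hours(
    units_per_week=6000,
    cycle_before_s=60.0,
    cycle_after_s=48.0,
)
print(f"Capacity released: {hours:.1f} hours per week")
```

A figure expressed in hours per week on a named asset is something both production and finance can check against their own records, which is what makes it a credible outcome metric.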

If you are considering digital twins, start with readiness, not software. I offer a Twin Ready Evaluation that identifies your best first use case, the minimum process and data spine required, and a 90- to 120-day execution plan tied to measurable outcomes. If you want to discuss your first twin use case, write to me.

Related reading: Digital Twins for Competitive Advantage

Related reading: Leveraging Digital Twins for Efficient Automation